Many negative consequences flow reliably from a financial crisis, including unemployment, political turmoil, and piles of sovereign debt. Since the 2008 financial meltdown, however, we’ve seen almost none of the good consequences, and there are supposed to be good ones. Crashes and severe recessions are often followed by bursts of innovation that lay the groundwork for decades of future growth and productivity gains. Severe downturns can perform a vital cleansing function, toppling seemingly unassailable market positions and clearing a path for newcomers with disruptive ideas. The economic transformations that followed the major worldwide crashes before 2008, in 1873, 1929, and 1973, were breathtaking. Indeed, the 1870s, 1930s, and 1970s were among the most innovative decades in history. The 1930s, for example, remembered mostly for the Great Depression, were also a time of great technological progress in areas such as jet engines, synthetic materials, television, and computers. The 1970s saw enormous advances in personal computing, the digital camera, the Internet and e-mail (via the ARPANET), automotive technology (such as antilock brakes), phones that were truly mobile (even if you weren’t in a car), CAT and MRI scans, recombinant DNA, and IVF.

Yet here we are, nearly a decade after the worst financial crisis in modern memory, and we’ve seen few of these kinds of benefits. Don’t let heady stock prices, record corporate profits, and low unemployment fool you. America is only now emerging from a lost decade. Instead of renewal, the last ten years were blighted by slow growth, stagnant productivity, limited social mobility, long-term unemployment and underemployment, and despair.

The economic legacy of the last decade is excessive corporate consolidation, a massive transfer of wealth from the middle class to the top 1 percent, the creation of even more asset bubbles, and rising social tensions. America is incredibly resilient. We are not Japan. We are recovering. But the Federal Reserve Board, the government body charged with establishing the monetary conditions for economic recovery, has hampered that recovery at every stage. The Fed is not solely responsible for America’s lost decade; the impact of its misguided policies was compounded by other factors, including regulatory and congressional capture and crony capitalism. Yet the central bank did play a leading role. There is little evidence to suggest that it recognizes this fact, or that recent appointments will challenge the status quo.

Economic historians will debate the causes of the 2007–08 financial crisis for years, but it’s clear that a flood of cheap money and a breakdown of oversight inflated a gigantic asset bubble, the bursting of which triggered the crisis. During the boom years, few noticed the growing problem. Things began to go wrong in 2007, and 2008, of course, brought catastrophe. Insisting at first that the economy had entered an ordinary postwar-style recession, the Fed acted hesitantly, helping some big banks but allowing Lehman Brothers to fail, which brought the financial system to the brink of collapse. Ben Bernanke’s Federal Reserve, along with Hank Paulson’s Treasury, then moved quickly to prop up the system, hoping to avoid a total meltdown.

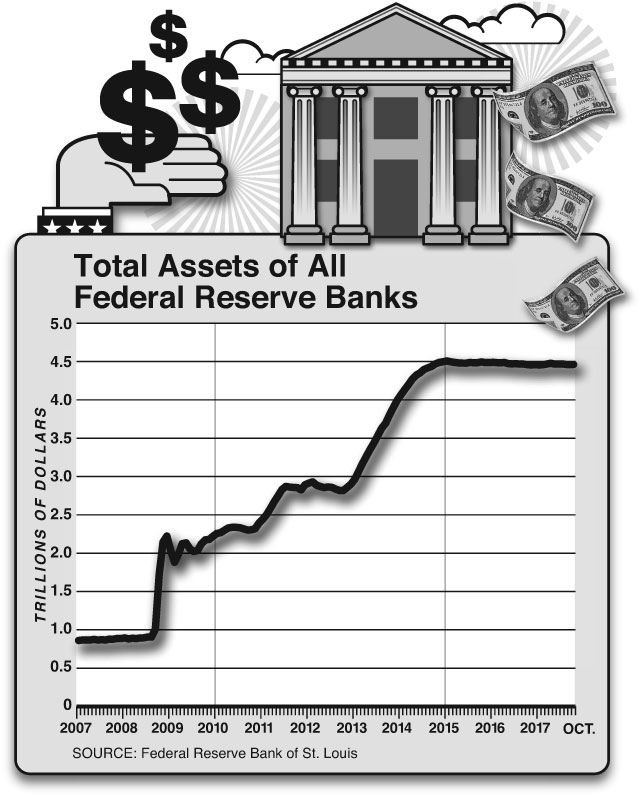

Averting a crisis was necessary. But the Fed and the Treasury did not stop there. They wound up bailing out huge Wall Street banks that contribute little to the productive “real” economy, along with an insurer (AIG) and large, inefficient industrial companies. Embarking on a new policy of “quantitative easing” to bolster the lending market, the Fed vastly expanded its balance sheet by purchasing debt to keep interest rates down, confident that productive borrowing would follow. At the end of July 2008, before the crash, the Fed’s assets stood at $0.9 trillion. By July 2017, they had ballooned to $4.5 trillion, a fivefold increase (see graph above). These measures yielded little. Near-zero rates did not spur an expansion of jobs or of productivity-enhancing research. Instead, the easy money went to other uses, including the funding of mergers and acquisitions among giant companies and highly leveraged buyouts sponsored by private-equity firms.

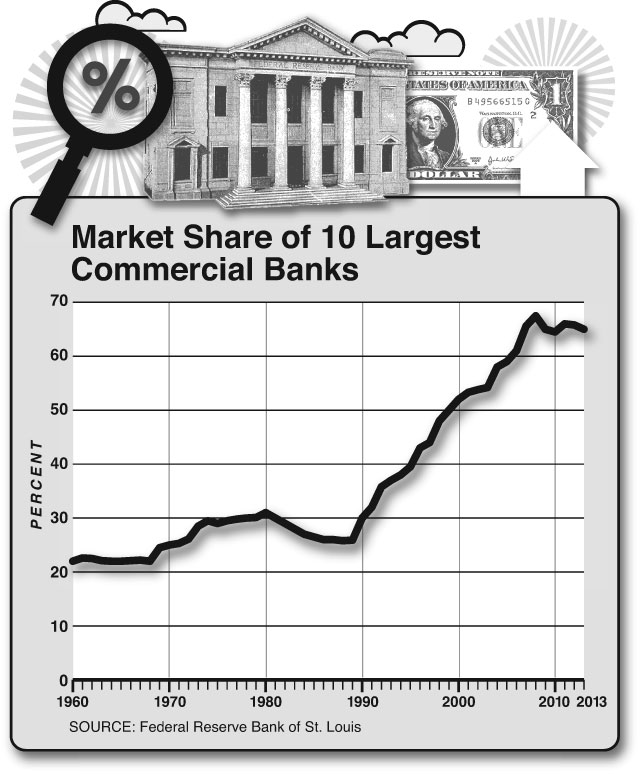

It is community and regional banks, along with private credit companies and lenders, that drive growth by lending money to smaller enterprises to build out productive capacity. But the Fed and the Treasury concentrated on helping the institutions that they knew best—the mega-banks that, by 2008, had largely exited the business of lending to smaller companies and were focused on securities trading, proprietary investing, and serving large enterprises, governments, and financial institutions. The mega-banks’ market share has relentlessly increased (see graph above).

After eight years of Fed support, plus increased concentration and wide regulatory moats, the profitability of the huge banks has more than recovered. But with what results? The overall economy saw no obvious benefit. Meanwhile, the community and regional banking system atrophied. In the past, the resulting gap in lending would have been filled by new, locally focused banks. This time, ultralow interest rates and expensive new regulations made starting a new bank almost impossible. The number of new banks plummeted from an average of 200 a year before 2008 to fewer than one a year since the crash. Lending to small businesses shrank.

At the same time, cheap credit helped large companies build monopolies and oligopolies across many industry segments, producing a level of concentration and monopoly profits not seen in the American economy in over 100 years. This, again, was not just the Federal Reserve’s fault. The decline of American antitrust enforcement since the 1990s, which permitted levels of consolidation and anticompetitive practices that wouldn’t have been tolerated 30 years ago, added to the problem. So did the smothering tangle of laws and regulations that descended on American business from every level of government, burdening smaller, innovative firms disproportionately while benefiting incumbents.

The ready availability of cheap money to large enterprises fueled shareholder dividends and financial engineering far more than investment. Altogether, these maneuvers drove record profits for large companies and helped fuel a bullish stock market, but they created few jobs, and even fewer well-paying ones.

In retrospect, everything that the Fed did in response to the crisis entrenched the status quo. Though the mega-banks were insolvent, the government chose not to restructure them directly. Instead, it provided them with a “too big to fail” guarantee, letting them borrow at almost no cost and rebuild their capital without imposing obligations in return. For their part, the regional and community banks and credit companies that actually financed job creation faced substantially heavier regulatory burdens and pressure to reduce their loan exposures. Further, the central bank failed to grasp the critical role of credit pipelines, which enable smaller institutions to resell loans and thereby free up balance-sheet capacity to make more loans. Those pipelines collapsed. Interbank lending also ground to a halt, drying up a market that had given smaller institutions access to liquidity. Without it, they had to keep more short-term assets on hand, further shrinking their loan portfolios.

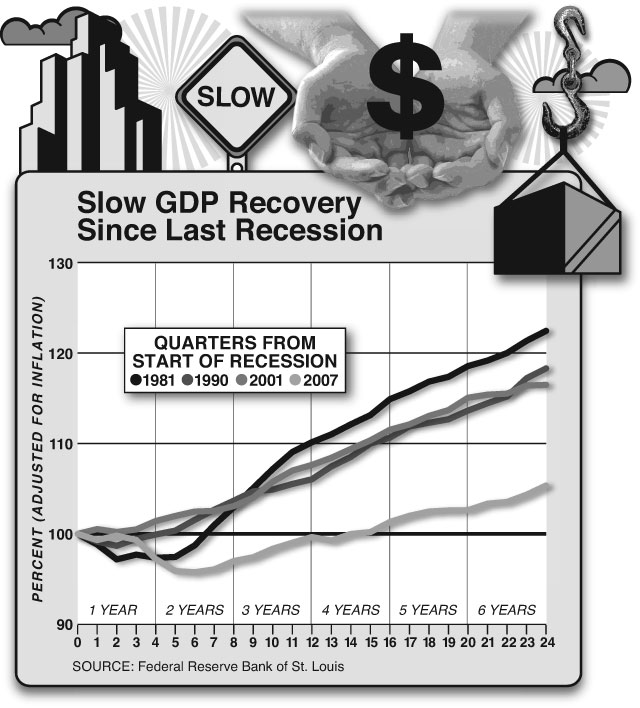

Given the severity of the Great Recession, we might have expected far better. Historically, the most severe downturns have heralded the most robust recoveries, and those rebounds have usually come fast. In a 2017 paper, “Deep Recessions, Fast Recoveries, and Financial Crises: Evidence from the American Record,” economists Michael Bordo and Joseph Haubrich write that “recessions associated with financial crises are generally followed by rapid recoveries.” Not this time. In 2014, economist John B. Taylor, an influential scholar of monetary policy who was later considered as a successor to Janet Yellen as Fed chief, said that the U.S. recovery “remains an outlier, as one of the few cases where output did not return to the level of the previous peak after the duration of the recession” (see graph above). In fact, the post-2008 recovery may be the weakest that America has ever had, even compared with the post-1929 recovery.

A main culprit was all that cheap money. Economist Sébastien E. J. Walker and I studied the impact of ultralow interest rates on economic growth, publishing our findings in 2012. Despite an almost religious faith among economists and central bankers in the benefits of near-zero rates, an extensive search of the literature failed to turn up any earlier empirical study showing that ultralow rates were beneficial. The conventional, unchallenged wisdom held that the lower the rate, the greater the subsequent growth. At least at recent rate levels, that wisdom was wrong. It’s true that, after adjusting for other variables, lower real interest rates were associated with faster economic growth in subsequent periods, but only up to a point.

Contrary to expectations, rates below the normal level actually retarded subsequent growth. The relationship between real interest rates and subsequent economic growth is described by a hump-shaped curve, not a straight line: growth peaks near the normal rate and falls off as rates are pushed below it. Ultralow rates ease the pressure on enterprises to adopt productivity-enhancing innovations, restructure inefficient operations, and dispose of unproductive assets. That’s a major reason that productivity growth has been so poor. Ultralow rates also distort capital allocation. Governments overborrow, and large corporations favor debt over equity in their capital mix. Speculation abounds. Yet because the withdrawal of smaller lending institutions has split the credit markets in two, interest rates are far from zero for small and medium-size businesses, which have paid relatively high rates throughout this period.

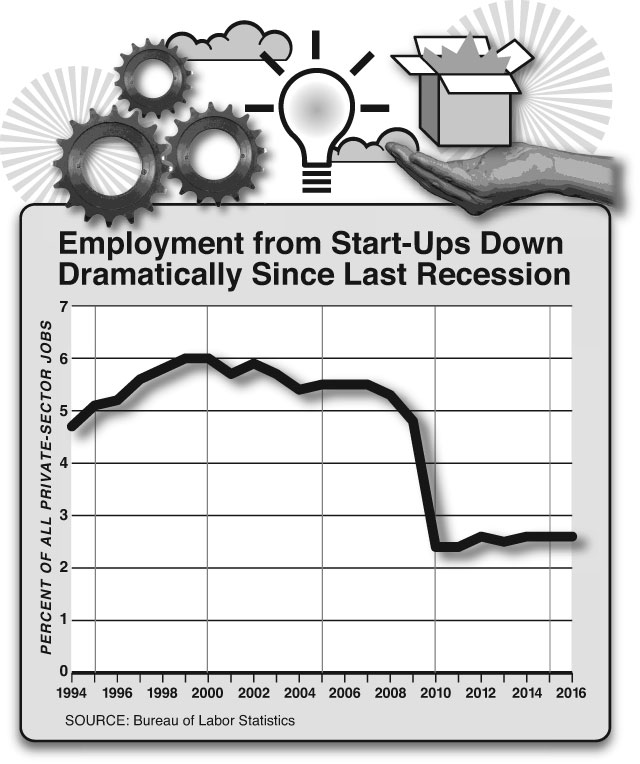

Job growth has historically come from innovative new enterprises. The number of new firms has fallen substantially since the crash, and the percentage of employment in start-ups has dropped dramatically (see graph above). Smaller enterprises’ limited access to credit is one factor in this decline. Others are intertwined: low growth prospects, the high costs of regulation and health care, and the difficulty of breaking into markets dominated by entrenched players whose customers have little incentive to switch.

Over two centuries of American history, earlier credit crashes destroyed precrash wealth and overturned established power structures. The process was traumatic for the wealthy, yes, but it was healthy for the nation, democratizing society, spurring innovation, and bringing decades’ worth of increased productivity and job creation. None of this happened after 2008. Instead, ultralow interest rates produced a massive transfer of wealth from individual savers, beneficiaries of retirement funds, providers of annuities, insurance companies, and sponsors of defined-benefit pension plans to very wealthy investors, large corporations, and mega-banks. We’ve redirected money, in other words, from those likely to pump it back into the economy or start new businesses to those who have so much that they tend to sit on most of it or use it to buy speculative assets.

The extraordinary postcrash prosperity of the precrash superrich is altogether new in our history. Near-zero interest rates are a godsend to the wealthy, inflating their real estate, stock portfolios, bond portfolios, private equity holdings, and art collections. Middle-class and blue-collar Americans, meanwhile, haven’t made much progress, and many are worse off. Inequality has increased.

Rising income disparities have many causes, including technological innovation, global trade, assortative mating, and the higher premium attached to cognitive skills in today’s economy. These factors alone, though, do not account for the widening income gap that followed the 2008 crash. Monetary policy also played a significant part. It was certainly a primary factor in eroding middle-class savings and inflating the value of the superrich’s assets. Eight years of low interest rates have degraded middle- and working-class financial security by depressing the returns on their savings. The reduction in pension-fund income alone is staggering, and it closely tracks the shrinking returns on debt assets.

Yet positive change seems to be arriving at last. In Washington, the Trump administration is pushing to dismantle costly regulations that affect business. The ideological tide regarding antitrust interventions is shifting, even at the University of Chicago. Corporate taxes are being slashed, perhaps leading to repatriation of offshore funds. The state and local tax (SALT) deduction, which largely benefits the rich (and public employees) in places like New York, is being reduced or eliminated.

Despite the effects of counterproductive policies, the U.S. economy looks poised once again to embark on an innovation surge of historic proportions, one that will disrupt established players, generate substantial returns for investors, and improve the lives of working Americans. The technological foundation for historic change is already in place. On-demand Internet services, for example, which have already reshaped the taxi industry, will soon have similar effects on many other industries. The Internet has penetrated almost every sector of society, and rapid advances in miniaturization have driven staggering increases in the computing power, bandwidth, and cloud storage that a dollar will buy. Global bandwidth, storage, and processing capabilities increase a thousandfold each decade. Compounded over the two decades since the last great wave of innovation, in the late 1990s, that amounts to a millionfold increase in capability. Data storage is now virtually free, enabling a vast range of cloud and hybrid applications. Artificial intelligence is evolving rapidly. Perhaps the most far-reaching innovations will occur in medicine, with the cheap digitization of genetics, and in business services, where enterprise information technology, still running largely on legacy systems, will soon be replaced by advanced frameworks that can help corporations put their data to productive use, reduce expenses, and improve overall efficiency. These systems are likely to be more secure and far cheaper and easier to operate.

The conditions for growth have existed for years without coming to full fruition: innovative new companies with viable business models, customers fed up with paying economic rent to entrenched vendors, and young entrepreneurial workers. But now, new institutions have at last emerged to finance growing small and medium-size businesses. Family offices that manage the assets of rich private individuals are investing directly in promising companies. Private-debt funds are a growing presence in the economy. These investors are helping to fill the gap left by the smaller banks.

There was hope that President Trump would nominate a new Fed leader (to replace outgoing chair Yellen) who would depart from the policies of the past decade. Unfortunately, Trump’s choice of current Fed governor Jerome Powell as the central bank’s new chairman is not likely to result in fundamental policy change. As the Washington Post reports, “Powell is widely expected to keep Yellen’s tactics largely in place, albeit with a bit more of a Wall Street touch.” More of a “Wall Street touch” is precisely what we don’t need. The next chair would best serve the American people by bringing a missing Main Street touch to the Fed and working with other agencies to rebuild the lending infrastructure for growing smaller businesses.

One way or another, though, the Fed’s protracted experiment with ultralow rates is going to end. Fed or no Fed, the next big boom seems about to burst forth. Americans remain the world’s best creative destructionists.

Photo: Gearstd/iStock